Cross-Datacenter support in Keycloak

September 06 2017 by Marek Posolda

In Keycloak 3.3.0.CR1 we added a basic setup for cross-datacenter (cross-site) replication. This blog post covers some details about it. It consists of 2 parts:

- Some technical details and challenges, which we needed to address

- Example setup

If you're not interested in too many details and would rather try things out, feel free to go directly to the example. Or vice versa :-)

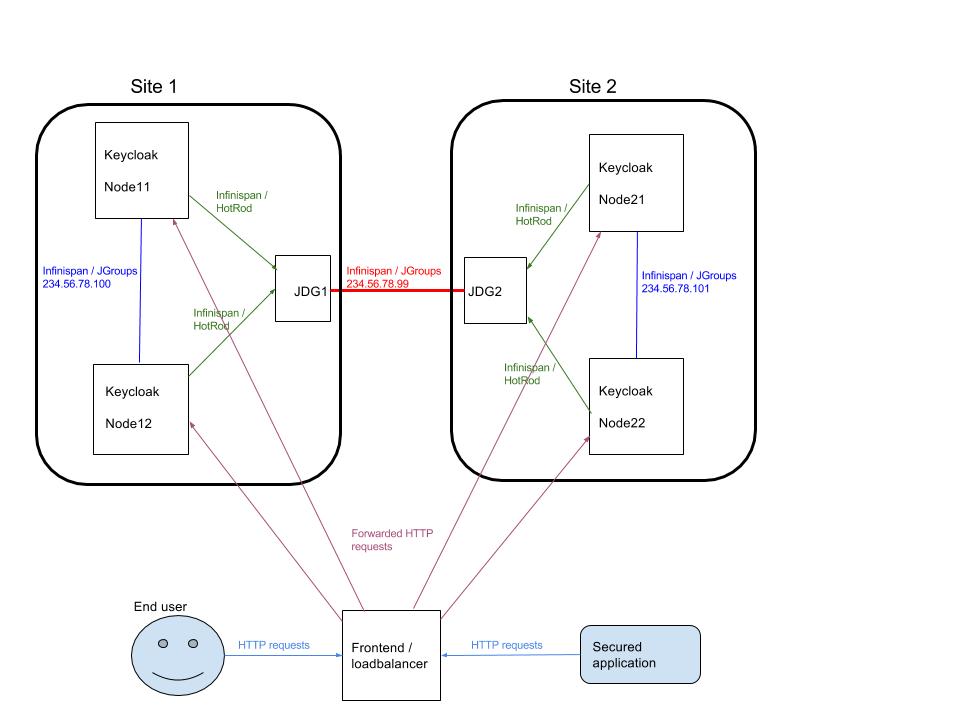

Here is the picture with the basic example architecture

Technical details

In a typical scenario, the end user's browser sends an HTTP request to the frontend loadbalancer. This is usually HTTPD or Wildfly with mod_cluster, NGINX, HAProxy or some other kind of software or hardware loadbalancer. The loadbalancer then forwards HTTP requests to the underlying Keycloak instances, which can be spread among multiple datacenters (sites). Loadbalancers typically offer support for sticky sessions, which means that the loadbalancer is able to always forward HTTP requests from one user to the same Keycloak instance in the same datacenter.

There are also HTTP requests that are sent from client applications to the loadbalancer. Those are backchannel requests. They are not seen by the end user's browser and can't be part of the sticky session between the user and the loadbalancer, hence the loadbalancer can forward a particular backchannel request to any Keycloak instance in any datacenter. This is challenging, as some OpenID Connect or SAML flows require multiple HTTP requests from both the user and the application. Because we can't rely on sticky sessions, some data need to be replicated between datacenters so that they are seen by subsequent HTTP requests during a particular flow.

Authentication sessions

In Keycloak 3.2.0 we did some refactoring and introduced authentication sessions. There is a separate infinispan cache, authenticationSessions, used to save data during the authentication of a particular user. Requests using this cache usually involve just the browser and the Keycloak server, not the application. Hence we can usually rely on sticky sessions, and the authenticationSessions cache content usually doesn't need to be replicated among datacenters.

Action tokens

In 3.2.0 we also introduced action tokens, which are typically used for scenarios where the user needs to confirm some action asynchronously by email, for example during the forgot password flow. The actionTokens infinispan cache is used to track metadata about action tokens (eg. which action token was already used, so that it can't be reused a second time) and it usually needs to be replicated between datacenters.

Database

Keycloak uses an RDBMS to persist some metadata about realms, clients, users etc. In a cross-datacenter setup, we assume that either both datacenters talk to the same database, or every datacenter has its own database and both databases are synchronously replicated. In other words, when a Keycloak server in site 1 persists any data and the transaction is committed, those data are immediately visible to subsequent DB transactions on site 2.

Details of the DB setup are out of scope for Keycloak, however note that many RDBMS such as PostgreSQL or MariaDB offer replicated databases and synchronous replication. Databases are not shown in the example picture above, just to make it a bit simpler.

Caching and invalidation of persistent data

Keycloak uses infinispan to cache persistent data and avoid many unnecessary requests to the database. Caching is great for performance, however it brings one additional challenge: when some Keycloak server updates any data, all other Keycloak servers in all datacenters need to be aware of it, so that they invalidate the particular data from their caches. Keycloak uses local infinispan caches called realms, users and authorization to cache persistent data.

We use a separate cache, work, which is replicated among all datacenters. The work cache itself doesn't cache any real data. It is de facto used just for sending invalidation messages between cluster nodes and datacenters. In other words, when some data is updated (eg. user "john" is updated), the particular Keycloak node sends an invalidation message to all other cluster nodes in the same datacenter and also to all other datacenters. Every node then invalidates the particular data from its local cache once it receives the invalidation message.

User sessions

There are infinispan caches sessions and offlineSessions, which usually need to be replicated between datacenters. Those caches are used to save data about user sessions, which are valid for the whole life of one user's browser session. The caches need to deal with HTTP requests from both the end user and the application. As described above, sticky sessions can't be reliably used in this case, but we still want to ensure that subsequent HTTP requests can see the latest data. Hence the data are replicated.

Brute force protection

Finally, the loginFailures cache is used to track data about failed logins (eg. how many times user john entered a bad password on the username/password screen etc). It is up to the admin whether this cache is replicated between datacenters. To have an accurate count of login failures, the replication is needed. On the other hand, not replicating this data can save some performance. So if performance is more important than accurate counts of login failures, the replication can be avoided.

Communication details

Under the covers, there are multiple separate infinispan clusters here. Every Keycloak node is in a cluster with the other Keycloak nodes in the same datacenter, but not with the Keycloak nodes in different datacenters. A Keycloak node doesn't communicate directly with the Keycloak nodes from different datacenters. Keycloak nodes use an external JDG (or infinispan server) for communication between datacenters. This is done through the Infinispan HotRod protocol.

The infinispan caches on the Keycloak side need to be configured with the remoteStore, to ensure that data are saved to the remote cache, which uses the HotRod protocol under the covers. There is a separate infinispan cluster between the JDG servers, so the data saved on JDG1 on site 1 are replicated to JDG2 on site 2.

Finally, the receiving JDG server notifies the Keycloak servers in its cluster through Client Listeners, which are a feature of the HotRod protocol. Keycloak nodes on site 2 then update their infinispan caches, and the particular userSession is visible on Keycloak nodes on site 2 too.

Example setup

This is the example setup simulating 2 datacenters, site 1 and site 2. Each datacenter (site) consists of 1 infinispan server and 2 Keycloak servers. So there are 2 infinispan servers and 4 Keycloak servers in total in the testing setup.

- Site1 consists of infinispan server jdg1 and 2 Keycloak servers node11 and node12.

- Site2 consists of infinispan server jdg2 and 2 Keycloak servers node21 and node22.

- Infinispan servers jdg1 and jdg2 form a cluster with each other and they are used as a channel for communication between the 2 datacenters. Again, in production there would also be a clustered DB replicated between datacenters (each site has its own DB), but that's not the case in this example, which just uses a single DB.

- Keycloak servers node11 and node12 form a cluster with each other, but they don't communicate with any server in site2. They communicate with infinispan server jdg1 through the HotRod protocol (Remote cache).

- The same applies to node21 and node22. They form a cluster with each other and communicate just with the jdg2 server through the HotRod protocol.
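To make the layout above more concrete, here is a small shell sketch that prints the effective port of every server in the example. The port offsets are the ones passed to the start commands later in this post. The base ports (8080 for the Keycloak HTTP listener and 11222 for the infinispan HotRod endpoint) are assumed to be the unmodified defaults of the two distributions, and the helper names effective_port and print_row are purely illustrative.

```shell
#!/bin/sh
# Assumed default base ports of an unmodified configuration.
HTTP_BASE=8080      # Keycloak (WildFly) HTTP listener
HOTROD_BASE=11222   # Infinispan server HotRod endpoint

# effective_port BASE OFFSET
# Port actually bound once jboss.socket.binding.port-offset is applied.
effective_port() {
  echo $(( $1 + $2 ))
}

# print_row NAME SITE KIND BASE OFFSET
# Prints one line of the topology: server name, site, endpoint kind, port.
print_row() {
  printf '%s %s %s %s\n' "$1" "$2" "$3" "$(effective_port "$4" "$5")"
}

# Offsets taken from the start commands used in this example setup.
print_row jdg1   site1 hotrod "$HOTROD_BASE" 1010
print_row jdg2   site2 hotrod "$HOTROD_BASE" 2010
print_row node11 site1 http   "$HTTP_BASE"   3000
print_row node12 site1 http   "$HTTP_BASE"   4000
print_row node21 site2 http   "$HTTP_BASE"   5000
print_row node22 site2 http   "$HTTP_BASE"   6000
```

Under those assumptions, the two HotRod ports come out as 12232 and 13232, which are the remote.cache.port values given to the Keycloak nodes of site1 and site2, and the HTTP ports come out as 11080 to 14080, which are the ports in the URLs of the test step.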

Infinispan Server setup

1) Download Infinispan 8.2.6 server and unzip to some folder

2) Add this into JDG1_HOME/standalone/configuration/clustered.xml into cache-container named clustered:

<cache-container name="clustered" default-cache="default" statistics="true">

...

<replicated-cache-configuration name="sessions-cfg" mode="ASYNC" start="EAGER" batching="false">

<transaction mode="NON_XA" locking="PESSIMISTIC"/>

</replicated-cache-configuration>

<replicated-cache name="work" configuration="sessions-cfg" />

<replicated-cache name="sessions" configuration="sessions-cfg" />

<replicated-cache name="offlineSessions" configuration="sessions-cfg" />

<replicated-cache name="actionTokens" configuration="sessions-cfg" />

<replicated-cache name="loginFailures" configuration="sessions-cfg" />

</cache-container>

3) Copy the server into a second location, referred to later as JDG2_HOME

4) Start server jdg1:

cd JDG1_HOME/bin
./standalone.sh -c clustered.xml -Djava.net.preferIPv4Stack=true \
  -Djboss.socket.binding.port-offset=1010 -Djboss.default.multicast.address=234.56.78.99 \
  -Djboss.node.name=jdg1

5) Start server jdg2:

cd JDG2_HOME/bin
./standalone.sh -c clustered.xml -Djava.net.preferIPv4Stack=true \
  -Djboss.socket.binding.port-offset=2010 -Djboss.default.multicast.address=234.56.78.99 \
  -Djboss.node.name=jdg2

6) There should be a message in the log that the nodes are in a cluster with each other:

Received new cluster view for channel clustered: [jdg1|1] (2) [jdg1, jdg2]
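If you prefer not to scan the log by eye, a check along the following lines may help. It is only a sketch: the function name has_cluster_view is made up for this post, and the log location in the usage comment (JDG1_HOME/standalone/log/server.log) is an assumption based on the usual layout of the server distribution.

```shell
#!/bin/sh
# has_cluster_view LOGFILE MEMBERS
# Succeeds if LOGFILE contains a "Received new cluster view" line whose
# member list is exactly MEMBERS, written as in the log, e.g. "jdg1, jdg2".
has_cluster_view() {
  grep -F "Received new cluster view" "$1" | grep -qF -- "[$2]"
}

# Example usage (assumed log path, adjust to your installation):
#   has_cluster_view JDG1_HOME/standalone/log/server.log "jdg1, jdg2" \
#     && echo "jdg1 and jdg2 are clustered"
```

The member list is matched as a fixed string including the square brackets, so a view that contains only one of the two servers does not count as a match.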

Keycloak servers setup

1) Download Keycloak 3.3.0.CR1 and unzip to some location, referred to later as NODE11

2) Configure a shared database for the KeycloakDS datasource. It is recommended to use MySQL, MariaDB or PostgreSQL. See the Keycloak docs for more details

3) Edit NODE11/standalone/configuration/standalone-ha.xml :

3.1) Add attribute site to the JGroups UDP protocol:

<stack name="udp">

<transport site="${jboss.site.name}" socket-binding="jgroups-udp" type="UDP">

3.2) Add outbound-socket-binding for remote-cache into socket-binding-group element:

<socket-binding-group ... >

...

<outbound-socket-binding name="remote-cache">

<remote-destination host="localhost" port="${remote.cache.port}">

</remote-destination>

</outbound-socket-binding>

</socket-binding-group>

3.3) Add this module attribute into cache-container element of name keycloak:

<cache-container jndi-name="infinispan/Keycloak" module="org.keycloak.keycloak-model-infinispan" name="keycloak">

3.4) Add the remote-store into work cache:

<replicated-cache mode="SYNC" name="work">

<remote-store cache="work" fetch-state="false" passivation="false" preload="false"

purge="false" remote-servers="remote-cache" shared="true">

<property name="rawValues">true</property>

<property name="marshaller">org.keycloak.cluster.infinispan.KeycloakHotRodMarshallerFactory</property>

</remote-store>

</replicated-cache>

3.5) Add the store like this into sessions cache:

<distributed-cache mode="SYNC" name="sessions" owners="1">

<store class="org.keycloak.models.sessions.infinispan.remotestore.KeycloakRemoteStoreConfigurationBuilder"

fetch-state="false" passivation="false" preload="false" purge="false" shared="true">

<property name="remoteCacheName">sessions</property>

<property name="useConfigTemplateFromCache">work</property>

</store>

</distributed-cache>

3.6) Same for offlineSessions and loginFailures caches:

<distributed-cache mode="SYNC" name="offlineSessions" owners="1">

<store class="org.keycloak.models.sessions.infinispan.remotestore.KeycloakRemoteStoreConfigurationBuilder"

fetch-state="false" passivation="false" preload="false" purge="false" shared="true">

<property name="remoteCacheName">offlineSessions</property>

<property name="useConfigTemplateFromCache">work</property>

</store>

</distributed-cache>

<distributed-cache mode="SYNC" name="loginFailures" owners="1">

<store class="org.keycloak.models.sessions.infinispan.remotestore.KeycloakRemoteStoreConfigurationBuilder"

fetch-state="false" passivation="false" preload="false" purge="false" shared="true">

<property name="remoteCacheName">loginFailures</property>

<property name="useConfigTemplateFromCache">work</property>

</store>

</distributed-cache>

3.7) The configuration of distributed cache authenticationSessions and other caches is left unchanged.

3.8) Optionally enable DEBUG logging in the logging subsystem:

<logger category="org.keycloak.cluster.infinispan">

<level name="DEBUG"/>

</logger>

<logger category="org.keycloak.connections.infinispan">

<level name="DEBUG"/>

</logger>

<logger category="org.keycloak.models.cache.infinispan">

<level name="DEBUG"/>

</logger>

<logger category="org.keycloak.models.sessions.infinispan">

<level name="DEBUG"/>

</logger>

4) Copy NODE11 to 3 other directories, referred to later as NODE12, NODE21 and NODE22.

5) Start NODE11:

cd NODE11/bin
./standalone.sh -c standalone-ha.xml -Djboss.node.name=node11 -Djboss.site.name=site1 \
  -Djboss.default.multicast.address=234.56.78.100 -Dremote.cache.port=12232 -Djava.net.preferIPv4Stack=true \
  -Djboss.socket.binding.port-offset=3000

6) Start NODE12:

cd NODE12/bin
./standalone.sh -c standalone-ha.xml -Djboss.node.name=node12 -Djboss.site.name=site1 \
  -Djboss.default.multicast.address=234.56.78.100 -Dremote.cache.port=12232 -Djava.net.preferIPv4Stack=true \
  -Djboss.socket.binding.port-offset=4000

The cluster nodes should be connected. This should be in the log of both NODE11 and NODE12:

Received new cluster view for channel hibernate: [node11|1] (2) [node11, node12]

7) Start NODE21:

cd NODE21/bin
./standalone.sh -c standalone-ha.xml -Djboss.node.name=node21 -Djboss.site.name=site2 \
  -Djboss.default.multicast.address=234.56.78.101 -Dremote.cache.port=13232 -Djava.net.preferIPv4Stack=true \
  -Djboss.socket.binding.port-offset=5000

It shouldn't be connected to the cluster with NODE11 and NODE12, but to separate one:

Received new cluster view for channel hibernate: [node21|0] (1) [node21]

8) Start NODE22:

cd NODE22/bin
./standalone.sh -c standalone-ha.xml -Djboss.node.name=node22 -Djboss.site.name=site2 \
  -Djboss.default.multicast.address=234.56.78.101 -Dremote.cache.port=13232 -Djava.net.preferIPv4Stack=true \
  -Djboss.socket.binding.port-offset=6000

It should be in a cluster with NODE21:

Received new cluster view for channel server: [node21|1] (2) [node21, node22]

9) Test:

9.1) Go to http://localhost:11080/auth/ and create the initial admin user

9.2) Go to http://localhost:11080/auth/admin and log in as admin to the admin console

9.3) Open a 2nd browser and go to any of the other nodes: http://localhost:12080/auth/admin or http://localhost:13080/auth/admin or http://localhost:14080/auth/admin. After login, you should be able to see the same sessions in the Sessions tab of a particular user, client or realm on all 4 servers

9.4) After doing any change (eg. updating some user), the update should be immediately visible on any of the 4 nodes, as caches should be properly invalidated everywhere.

9.5) Check the server logs if needed. After login or logout, a message like this should be in NODEXY/standalone/log/server.log on all the nodes:

2017-08-25 17:35:17,737 DEBUG [org.keycloak.models.sessions.infinispan.remotestore.RemoteCacheSessionListener] (Client-Listener-sessions-30012a77422542f5) Received event from remote store. Event 'CLIENT_CACHE_ENTRY_REMOVED', key '193489e7-e2bc-4069-afe8-f1dfa73084ea', skip 'false'
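To compare the nodes quickly, you can count these messages in each server.log. The helper below is a sketch that only relies on the text of the log line shown above. The function name count_remote_events is made up for this post, and it assumes DEBUG logging was enabled as described in step 3.8.

```shell
#!/bin/sh
# count_remote_events LOGFILE [EVENT_TYPE]
# Prints how many "Received event from remote store" lines LOGFILE contains.
# If EVENT_TYPE is given (e.g. CLIENT_CACHE_ENTRY_REMOVED), only events whose
# type starts with that string are counted.
count_remote_events() {
  grep -F "Received event from remote store" "$1" \
    | grep -cF -- "Event '${2:-}" || true
}

# Example usage, run once per node directory:
#   for node in NODE11 NODE12 NODE21 NODE22; do
#     echo "$node: $(count_remote_events "$node/standalone/log/server.log" CLIENT_CACHE_ENTRY_REMOVED)"
#   done
```

If one node reports zero events after a login or logout while the others do not, that node is the first place to look when checking the remote store configuration and the connection to the infinispan server.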

Conclusion

This is just a starting point and the instructions are subject to change. We plan various improvements, especially around performance. If you have any feedback regarding the cross-dc scenario, please let us know on the keycloak-user mailing list, which is linked from the Keycloak home page.