Tracing and additional metrics with OpenTelemetry

Collect traces and additional metrics using OpenTelemetry during development and performance runs.

This setup is only for load tests with the Keycloak Benchmark Project. To find out more about how to use tracing in Keycloak, visit the Observability Guide on tracing.

Setting up OpenTelemetry

OpenTelemetry is disabled by default.

Enable it by customizing the setting KC_OTEL.

Depending on the setting KC_OTEL_SAMPLING_PERCENTAGE, only a percentage of traces might be recorded for performance reasons.
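To illustrate what recording "only a percentage of traces" means, here is a minimal sketch of a ratio-based sampling decision in Python. It is not Keycloak's implementation. The function name `should_sample` is hypothetical, and the sketch assumes the sampling value is expressed as a fraction between 0.0 and 1.0, which may differ from the format that KC_OTEL_SAMPLING_PERCENTAGE expects.

```python
def should_sample(trace_id: int, ratio: float) -> bool:
    """Decide whether a trace is recorded (hypothetical helper, for illustration only).

    Assumption: `ratio` is a fraction between 0.0 (record nothing) and 1.0 (record everything).
    """
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be between 0.0 and 1.0")
    # Derive the decision from the low 64 bits of the trace ID, so that the
    # same trace ID always leads to the same decision.
    bound = round(ratio * (1 << 64))
    return (trace_id & ((1 << 64) - 1)) < bound
```

With a ratio of 0.1, roughly one in ten randomly generated trace IDs would be recorded. A lower ratio reduces the tracing overhead during a load test, at the cost of fewer traces to inspect.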

Unlike other setups, this one does not use an OpenTelemetry collector. Instead, it sends traces directly to Jaeger.

Accessing OpenTelemetry traces

Grafana and Jaeger can be used to access the traces, and both are deployed by default.

Assuming the command minikube ip returned 192.168.39.39, Grafana is available on http://grafana.192.168.39.39.nip.io, and Jaeger is available on http://jaeger.192.168.39.39.nip.io
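The URL pattern above can be sketched as a small Python helper. The function name `service_url` is hypothetical and only illustrates how the host names are derived from the minikube IP address. The hard-coded IP address is the example value from the text.

```python
import ipaddress


def service_url(minikube_ip: str, service: str) -> str:
    """Build the nip.io URL for a service (hypothetical helper for illustration)."""
    # Fail early if the value is not a valid IP address.
    ipaddress.ip_address(minikube_ip)
    return f"http://{service}.{minikube_ip}.nip.io"


# Example value from the text. On a running cluster, use the output of `minikube ip`.
ip = "192.168.39.39"
grafana_url = service_url(ip, "grafana")
jaeger_url = service_url(ip, "jaeger")
print(grafana_url)
print(jaeger_url)
```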

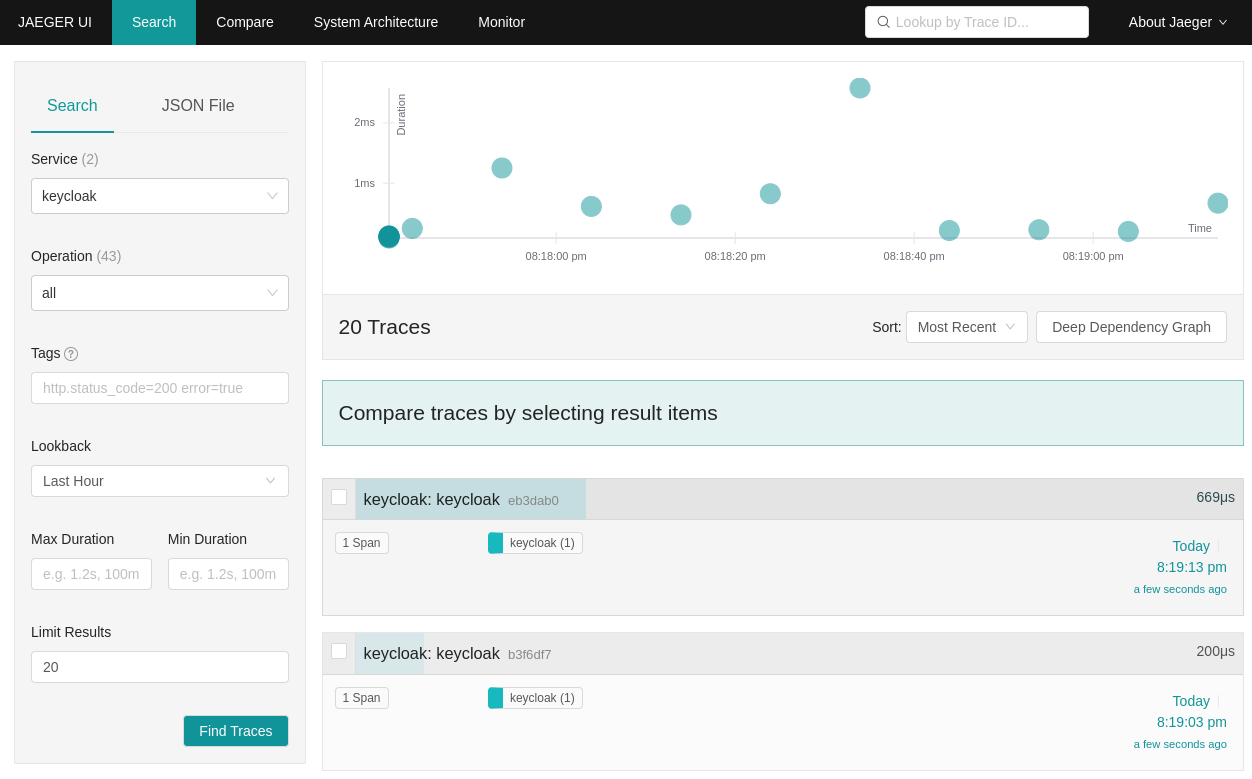

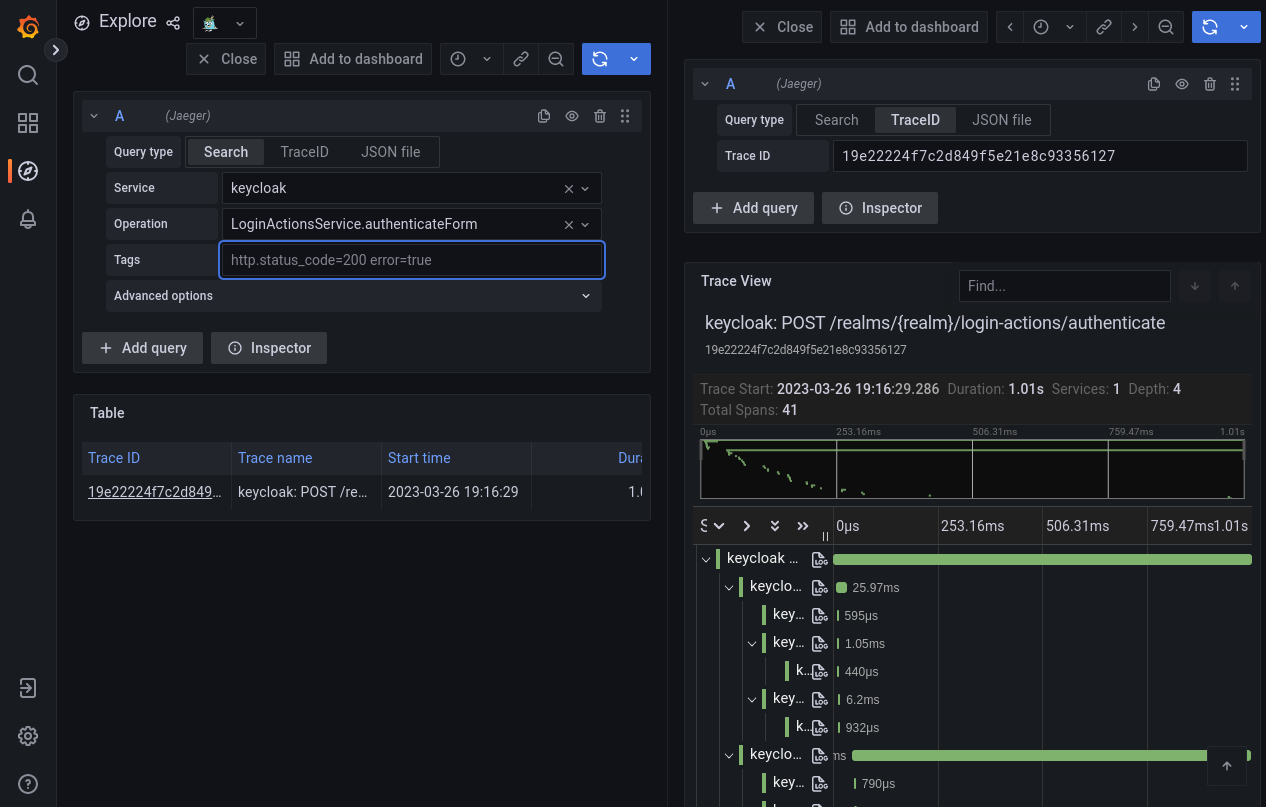

Use the browser to search for traces in Grafana via the Jaeger data source, or use the Jaeger UI directly.

When searching for traces in Grafana, select at least the service keycloak to see traces.

The results can be restricted further using the operation and the tags of the trace.

The Jaeger UI is similar. As in the Grafana UI, select the service keycloak before searching.

To find out more about the tool, visit the Jaeger homepage.

After running a search in the Jaeger UI, the search timestamp is fixed and encoded in the URL. Therefore, re-running the search or setting different filter options will not find traces which were created after running the first search. To start a search with the latest timestamp, click on Search in the menu toolbar.
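As an alternative to clicking Search, a search URL with a fresh time window could be generated by a script. The following Python sketch assumes that the Jaeger UI accepts the query parameters `service`, `start`, `end` and `limit`, with `start` and `end` given in microseconds since the Unix epoch. These parameter names are an assumption and should be checked against a URL copied from the Jaeger UI in use. The function name `jaeger_search_url` is hypothetical.

```python
import time
from urllib.parse import urlencode


def jaeger_search_url(base_url, service="keycloak", lookback_seconds=3600, limit=20, now=None):
    """Build a Jaeger UI search URL whose time window ends at the current time.

    Assumption: the UI reads `start` and `end` as microseconds since the Unix epoch.
    """
    now = time.time() if now is None else now
    end_us = int(now * 1_000_000)
    start_us = end_us - lookback_seconds * 1_000_000
    query = urlencode({"service": service, "start": start_us, "end": end_us, "limit": limit})
    return f"{base_url}/search?{query}"


# Example host from the text. Replace the IP address with the output of `minikube ip`.
print(jaeger_search_url("http://jaeger.192.168.39.39.nip.io"))
```

Because the end of the time window is computed on each call, every generated URL also covers traces created since the previous search.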